By NCI Staff

Two identical black-and-white pictures of murky shapes sit side by side on a computer screen. On the left side, Ismail Baris Turkbey, M.D., a radiologist with 15 years of experience, has outlined an area where the fuzzy shapes represent what he believes is a creeping, growing prostate cancer. On the other side of the screen, an artificial intelligence (AI) computer program has done the same, and the results are nearly identical.

The black-and-white image is an MRI scan from a person with prostate cancer, and the AI program has analyzed thousands of such scans.

“The [AI] model finds the prostate and outlines cancer-suspicious areas without any human supervision,” Dr. Turkbey explains. His hope is that the AI will help less experienced radiologists find prostate cancer when it’s present and dismiss anything that may be mistaken for cancer.

This model is just the tip of the iceberg when it comes to the intersection of artificial intelligence and cancer research. While the potential applications seem endless, much of that progress has centered on tools for cancer imaging.

From x-rays of whole organs to microscope pictures of cancer cells, doctors use imaging tests in many ways: finding cancer at its earliest stages, determining the stage of a tumor, seeing if treatment is working, and monitoring whether cancer has returned after treatment.

Over the past several years, researchers have developed AI tools that have the potential to make cancer imaging faster, more accurate, and even more informative. And that’s generated a lot of excitement.

“There’s a lot of hype [around AI], but there’s a lot of research that’s going into it as well,” said Stephanie Harmon, Ph.D., a data scientist in NCI’s Molecular Imaging Branch.

That research, experts say, includes addressing questions about whether these tools are ready to leave research labs and enter doctors’ offices, whether they will actually help patients, and whether that benefit will reach all—or only some—patients.

What is artificial intelligence?

Artificial intelligence refers to computer programs, or algorithms, that use data to make decisions or predictions. To build an algorithm, scientists might create a set of rules, or instructions, for the computer to follow so it can analyze data and make a decision.

For example, Dr. Turkbey and his colleagues used existing rules about how prostate cancer appears on an MRI scan. They then trained their algorithm using thousands of MRI studies—some from people known to have prostate cancer, and some from people who did not.

With other artificial intelligence approaches, like machine learning, the algorithm teaches itself how to analyze and interpret data. As such, machine learning algorithms may pick up on patterns that are not readily discernible to the human eye or brain. And as these algorithms are exposed to more new data, their ability to interpret that data can improve.
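To make the contrast between hand-written rules and learning from data more concrete, here is a toy sketch in Python. It is not the NCI prostate model or any real imaging tool: it assumes each image has already been reduced to a single made-up number (a hypothetical "suspicion score") with a known label, and all data points are invented. The rule-based function uses a cutoff a person fixed in advance, while the "learned" version tries every cutoff seen in the training examples and keeps the one that classifies them best.

```python
# Toy illustration only: invented (score, label) pairs, where label 1 = cancer, 0 = no cancer.
# The "suspicion score" is a hypothetical stand-in for features extracted from an image.
TRAINING = [
    (0.20, 0), (0.35, 0), (0.40, 0), (0.55, 1),
    (0.60, 0), (0.70, 1), (0.80, 1), (0.90, 1),
]


def rule_based(score: float, cutoff: float = 0.75) -> int:
    """Hand-written rule: a human chose the cutoff before seeing any data."""
    return 1 if score > cutoff else 0


def learn_cutoff(examples: list[tuple[float, int]]) -> float:
    """'Learn' a cutoff by trying each observed score and keeping the most accurate one."""
    best_cutoff, best_correct = examples[0][0], -1
    for candidate, _ in examples:
        correct = sum((1 if x > candidate else 0) == y for x, y in examples)
        if correct > best_correct:
            best_cutoff, best_correct = candidate, correct
    return best_cutoff


def accuracy(predict, examples) -> float:
    """Fraction of examples that the prediction function labels correctly."""
    return sum(predict(x) == y for x, y in examples) / len(examples)


if __name__ == "__main__":
    learned = learn_cutoff(TRAINING)
    print("learned cutoff:", learned)
    print("rule-based accuracy:", accuracy(rule_based, TRAINING))
    print("learned accuracy:", accuracy(lambda s: 1 if s > learned else 0, TRAINING))
```

On these invented examples, the learned cutoff (0.4) classifies 7 of 8 cases correctly, while the fixed rule gets 6 of 8. Real machine learning models adjust many more parameters than a single cutoff, but the principle of fitting the decision to labeled data is the same.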

Researchers have also used deep learning, a type of machine learning, in cancer imaging applications. Deep learning refers to algorithms that classify information in ways loosely modeled on how the human brain works. Deep learning tools use “artificial neural networks” that mimic, in simplified form, how our brain cells take in, process, and react to signals from the rest of our body.
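The idea of an artificial neuron can be sketched in a few lines. In the minimal Python example below, each "neuron" multiplies its inputs by weights, adds them up, and squashes the total into a value between 0 and 1 (a sigmoid function, one common choice). A tiny network then stacks two such neurons into a hidden layer that feeds one output neuron. The weights and the three-pixel input are made-up values for illustration only; a real deep learning model has many layers and a very large number of weights, and training sets those weights from labeled examples rather than by hand.

```python
import math


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of inputs, then a sigmoid 'firing' response."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))  # output lies between 0 and 1


def tiny_network(pixels: list[float]) -> float:
    """Two hidden neurons feed one output neuron. All weights here are hypothetical."""
    hidden = [
        neuron(pixels, [0.5, -0.2, 0.1], bias=0.0),
        neuron(pixels, [-0.3, 0.8, 0.4], bias=0.1),
    ]
    # The output neuron combines the hidden-layer signals into one score between 0 and 1.
    return neuron(hidden, [1.2, -0.7], bias=-0.2)


if __name__ == "__main__":
    print(tiny_network([0.1, 0.5, 0.9]))
```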

Research on AI for cancer imaging

Doctors use cancer imaging tests to answer a range of questions, like: Is it cancer or a harmless lump? If it is cancer, how fast is it growing? How far has it spread? Is it growing back after treatment? Studies suggest that AI has the potential to improve the speed, accuracy, and reliability with which doctors answer those questions.

“AI can automate assessments and tasks that humans currently can do but take a lot of time,” said Hugo Aerts, Ph.D., of Harvard Medical School. After the AI gives a result, “a radiologist simply needs to review what the AI has done—did it make the correct assessment?” Dr. Aerts continued. That automation is expected to save time and costs, but that still needs to be proven, he added.

In addition, AI could make image interpretation—a highly subjective task—more straightforward and reliable, Dr. Aerts noted.

Complex tasks that rely on “a human making an interpretation of an image (say, a radiologist, a dermatologist, a pathologist), that’s where we see enormous breakthroughs being made with deep learning,” he said.

But what scientists are most excited about is the potential for AI to go beyond what humans can currently do themselves. AI can “see” things that we humans can’t, and can find complex patterns and relationships between very different kinds of data.

“AI is great at doing this—at going beyond human performance for a lot of tasks,” Dr. Aerts said. But, in this case, it is often unclear how the AI reaches its conclusion, so it’s difficult for doctors and researchers to check if the tool is performing correctly.

Finding cancer early

Tests like mammograms and Pap tests are used to regularly check people for signs of cancer or precancerous cells that can turn into cancer. The goal is to catch and treat cancer early, before it spreads or even before it forms at all.

Scientists have developed AI tools to aid screening tests for several kinds of cancer, including breast cancer. AI-based computer programs have been used to help doctors interpret mammograms for more than 20 years, but research in this area is quickly evolving.

One group created an AI algorithm that can help determine how often someone should get screened for breast cancer. The model uses a person’s mammogram images to predict their risk of developing breast cancer in the next 5 years. In various tests, the model was more accurate than the current tools used to predict breast cancer risk.

NCI researchers have built and tested a deep learning algorithm that can identify cervical precancers that should be removed or treated. In some low-resource settings, health workers screen for cervical precancer by inspecting the cervix with a small camera. Although this method is simple and sustainable, it is not very reliable or accurate.

Mark Schiffman, M.D., M.P.H., of NCI’s Division of Cancer Epidemiology and Genetics, and his colleagues designed an algorithm to improve the ability to find cervical precancers with the visual inspection method. In a 2019 study, the algorithm performed better than trained experts.

For colon cancer, several AI tools have been shown in clinical trials to improve the detection of precancerous growths called adenomas. However, because only a small percentage of adenomas turn into cancer, some experts are concerned that such AI tools could lead to unnecessary treatments and extra tests for many patients.

Detecting cancer

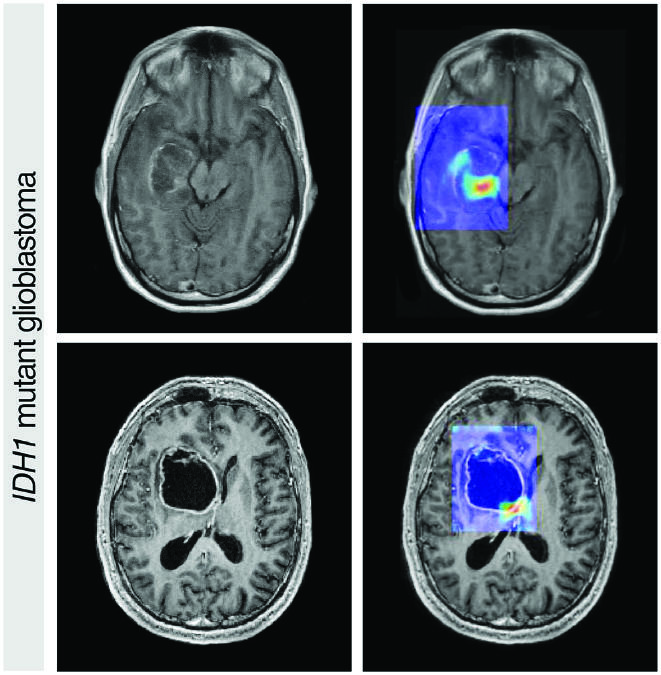

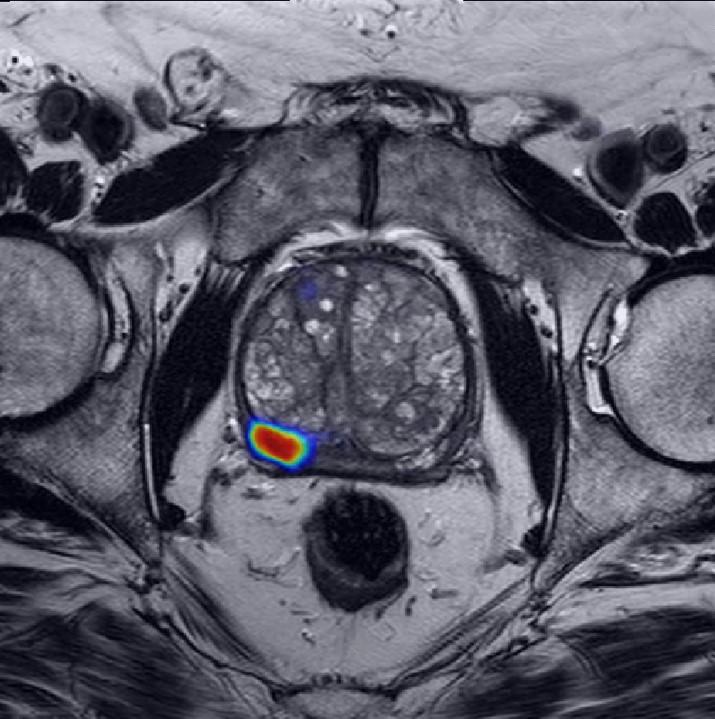

AI has also shown the potential to improve cancer detection in people who have symptoms. The AI model developed by Dr. Turkbey and his colleagues in NCI’s Center for Cancer Research, for instance, could make it easier for radiologists to pick out potentially aggressive prostate cancer on a relatively new kind of prostate MRI scan, called multiparametric MRI.

On a multiparametric MRI scan of a patient’s prostate, a cancer-suspicious area (red) is highlighted by an AI model developed by Dr. Turkbey.

Credit: Courtesy of Stephanie Harmon, Ph.D.

Although multiparametric MRI generates a more detailed picture of the prostate than a regular MRI, radiologists typically need years of practice to read these scans accurately, leading to disagreements between radiologists looking at the same scan.

The NCI team’s AI model “can make [the learning] curve easier for practicing radiologists and can minimize the error rate,” Dr. Turkbey said. The AI model could serve as “a virtual expert” to guide less-experienced radiologists learning to use multiparametric MRI, he added.

For lung cancer, several deep learning AI models have been developed to help doctors find lung cancer on CT scans. Some noncancerous changes in the lungs look a lot like cancer on CT scans, leading to a high rate of false-positive test results that indicate a person has lung cancer when they really don’t.

Experts think that AI may better distinguish lung cancer from noncancerous changes on CT scans, potentially cutting the number of false positives and sparing some people from unneeded stress, follow-up tests, and procedures.

For example, a team of researchers trained a deep learning algorithm to find lung cancer and to specifically avoid other changes that look like cancer. In lab tests, the algorithm was very good at ignoring noncancerous changes that look like cancer and good at finding cancer.

Choosing cancer treatment

Doctors also use imaging tests to get important information about cancer, such as how fast it is growing, whether it has spread, and whether it is likely to come back after treatment. This information can help doctors choose the most appropriate treatment for their patients.

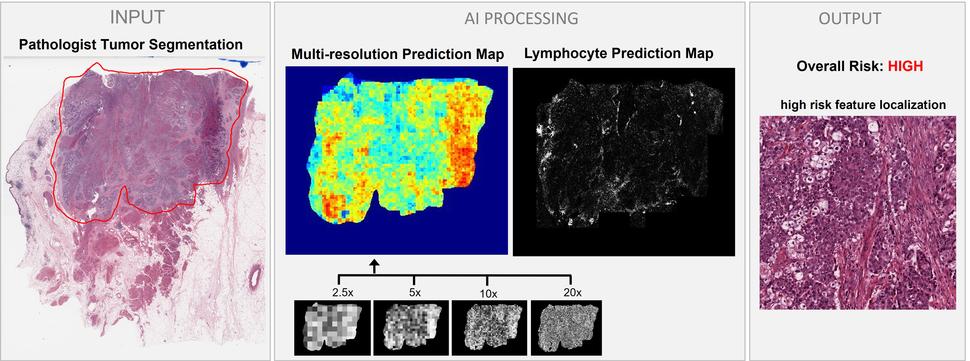

A number of studies suggest that AI has the potential to gather such prognostic information—and maybe even more—from imaging scans, and with greater precision than humans currently can. For example, Dr. Harmon and her colleagues created a deep learning model that can determine the likelihood that a patient with bladder cancer might need other treatments in addition to surgery.

Doctors estimate that around 50% of people with tumors in the bladder muscle (muscle-invasive bladder cancer) have clusters of cancer cells that have spread beyond the bladder but are too small to detect with traditional tools. If these hidden cells aren’t removed, they can continue growing after surgery, causing a relapse.

Chemotherapy can kill these microscopic clusters and prevent the cancer from coming back after surgery. But clinical trials have shown that it’s hard to determine which patients need chemotherapy in addition to surgery, Dr. Harmon said.

“What we would like to do is use this model before patients undergo any sort of treatment, to tell which patients have cancer with a high likelihood of spreading, so doctors can make informed decisions,” she explained.

Dr. Harmon’s AI model uses digital images of a bladder tumor tissue sample (“INPUT” on the left) to predict the risk of the cancer spreading to nearby lymph nodes (“OUTPUT” on the right).

Credit: Courtesy of Stephanie Harmon, Ph.D.

The model looks at digital images of primary tumor tissue to predict whether there are microscopic clusters of cancer in nearby lymph nodes. In a 2020 study, the deep learning model proved to be more accurate than the standard way of predicting whether bladder cancer has spread, which is based on a combination of factors including the patient’s age and certain characteristics of the tumor.

More and more, genetic information about the patients’ cancer is being used to help select the most appropriate treatment. Scientists in China created a deep learning tool to predict the presence of key gene mutations from images of liver cancer tissue—something pathologists can’t do by just looking at the images.

Their tool is an example of AI that works in mysterious ways: The scientists who built the algorithm don’t know how it senses which gene mutations are present in the tumor.

Are AI tools for cancer imaging ready for the real world?

Although scientists are churning out AI tools for cancer imaging, the field is still nascent and many questions about the practical applications of these tools remain unanswered.

While hundreds of algorithms have been shown to be accurate in early tests, most haven’t reached the next phase of testing, which shows whether they are ready for the real world, Dr. Harmon said.

That testing, known as external or independent validation, “tells us how generalizable our algorithm is. Meaning, how useful is it on a totally new patient? How does it perform on patients from different [medical] centers or different scanners?” Dr. Harmon explained. In other words, does the AI tool work accurately beyond the data it was trained on?
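As a rough illustration of why external validation matters, the hypothetical Python sketch below scores the same simple classifier on two invented datasets: an "internal" test set resembling its training data, and an "external" set from an imagined second center whose scanner makes every image score slightly higher. The classifier, the 0.5 threshold, and all data values are assumptions made up for this example, not drawn from any real study.

```python
def classify(score: float, threshold: float = 0.5) -> int:
    """Hypothetical model: call a case cancer-suspicious (1) if its score exceeds the threshold."""
    return 1 if score > threshold else 0


def accuracy(examples: list[tuple[float, int]]) -> float:
    """Fraction of (score, true_label) pairs that the model labels correctly."""
    return sum(classify(score) == label for score, label in examples) / len(examples)


# Invented test set from the same center and scanner as the training data.
INTERNAL = [(0.10, 0), (0.20, 0), (0.30, 0), (0.40, 0),
            (0.60, 1), (0.70, 1), (0.80, 1), (0.90, 1)]

# The same kinds of cases imaged at an imagined second center whose scanner
# shifts every score up by about 0.25, even though the tumor biology is unchanged.
EXTERNAL = [(0.35, 0), (0.45, 0), (0.55, 0), (0.65, 0),
            (0.85, 1), (0.95, 1), (1.05, 1), (1.15, 1)]

if __name__ == "__main__":
    print("internal accuracy:", accuracy(INTERNAL))  # 1.0
    print("external accuracy:", accuracy(EXTERNAL))  # 0.75
```

The model itself never changes, yet its accuracy drops from 100% to 75% because the new scanner shifts its inputs. Testing on data from other centers and scanners is how researchers can catch this kind of problem before a tool reaches patients.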

AI algorithms that pass rigorous validation testing in diverse groups of people from various areas of the world could be used more widely, and therefore help more people, she added.

In addition to validation, Dr. Turkbey noted, clinical studies also need to show that AI tools actually help patients, either by preventing people from getting cancer, helping them live longer or have a better quality of life, or saving them time or money.

But even after that, Dr. Aerts said, a major question about AI is: “How do we make sure that these algorithms keep on working and performing well for years and years?” For example, new scanners could change features of the image that an AI tool relies on to make predictions or interpretations, he explained, and that could change the tool’s performance.

There are also questions about how AI tools will be regulated. More than 60 AI-based medical devices or algorithms had earned FDA approval as of 2020. But even after they are approved, some machine learning algorithms shift as they are exposed to new data. In 2021, the FDA issued a framework for monitoring AI technologies that have the ability to adapt.

There are also concerns about the transparency of some AI tools. With some algorithms, like the one that can predict gene mutations in liver tumors, scientists don’t know how they reach their conclusions, a conundrum known as the “black box problem.” Experts say this lack of transparency prevents critical checks for biases and inaccuracies.

A recent study, for example, showed that a machine learning algorithm trained to predict cancer outcomes zeroed in on the hospital where the tumor image was taken, rather than the patient’s tumor biology. Although that algorithm isn’t used in any medical settings, other tools trained in the same way could have the same inaccuracy, the researchers warned.

There are also worries that AI could worsen gaps in health outcomes between privileged and disadvantaged groups by exacerbating biases that are already baked into our medical system and research processes, said Irene Dankwa-Mullan, M.D., M.P.H., deputy chief health equity officer of IBM Watson Health.

These biases are deeply embedded in the data used to create AI models, she explained at the 2021 American Association for Cancer Research Science of Cancer Health Disparities conference.

For instance, a handful of medical algorithms have recently been shown to be less accurate for Black people than for White people. These potentially dangerous shortcomings stem from the fact that the algorithms were mainly trained and validated on data from White patients, experts have noted.

On the other hand, some experts think AI could improve access to cancer care by bringing expert-level care to hospitals that lack specialists.

“What [AI] can do is, in a setting where there are physicians who maybe don’t have as much expertise, potentially it can bring their performance up to an expert level,” explained Dr. Harmon.

Some AI tools could even bypass the need for sophisticated equipment. The deep learning algorithm for cervical cancer screening developed by Dr. Schiffman and his colleagues, for example, relies on cell phones or digital cameras and low-cost materials.

Despite these concerns, most researchers are optimistic about the future of AI in cancer care. Dr. Aerts, for example, believes these hurdles are surmountable with more work and collaboration between experts in science, medicine, government, and community implementation.

“I think [AI technologies] will eventually be introduced into the clinic because the performance is just too good and it’s a waste if we don’t,” he said.